Databricks - Genie with Metric Views

In my previous post, I talked about why Metric Views matter and how they help organisations move toward governed, consistent reporting.

In this post, we’ll take the next step:

Create a Metric View → Build a Genie on top of it → Feed Metric Views into Genie → Benchmark the results.

If you're curious about how to combine governed metrics + natural-language access + quality validation, this one’s for you.

For today’s example, I’m using the Superstore dataset.

Step 1: Add the data to Unity Catalog and register it as a table

I started by uploading the Superstore CSV directly through the Databricks UI. Using the default schema, I imported the file and created a managed table. Nice and simple. (If you need a quick refresher, the GIF here walks through this flow.)

Step 2: Create a metric view on top of the table

With the table in place, the next step is to build a metric view. There are several ways to register metric views. In the previous blog, I showed how to do it through the UI.

If you want to register the metric view using SQL, check that your runtime is 17.2 or above. I’m currently on the 15.4 runtime, so I’ll stick with the UI method for now.

Click ‘Create’ from the same location where you created the table, then select ‘Metric View’ from the drop-down. Modify the metric view as needed. Once done, give it a name and click ‘Save’.
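
If your runtime does support SQL registration, here is a rough sketch of what the statement might look like, built as a string in Python. Everything named here is an assumption for illustration: the catalog, schema, table, and view names, the column names (`Region`, `Segment`, `Category`, `Sales`, `Profit`, `Discount`, `Order_ID`), and the measure names. Check them against your own import, and check the YAML `version` against what your runtime accepts.

```python
from textwrap import dedent

# Hypothetical names: adjust the catalog, schema, table and view names
# to match your own Superstore import.
SOURCE_TABLE = "main.default.superstore"
METRIC_VIEW = "main.default.superstore_metrics"

# YAML body of the metric view: one source table, a few dimensions and measures.
# Column names are assumed; the UI import may have renamed yours.
METRIC_VIEW_YAML = dedent("""\
    version: 0.1
    source: {source}
    dimensions:
      - name: region
        expr: Region
      - name: segment
        expr: Segment
      - name: category
        expr: Category
    measures:
      - name: total_sales
        expr: SUM(Sales)
      - name: total_profit
        expr: SUM(Profit)
      - name: avg_discount
        expr: AVG(Discount)
      - name: order_count
        expr: COUNT(DISTINCT Order_ID)
""").format(source=SOURCE_TABLE)


def build_metric_view_ddl(view_name: str, yaml_body: str) -> str:
    """Wrap a YAML definition in a CREATE VIEW ... WITH METRICS statement."""
    return (
        f"CREATE OR REPLACE VIEW {view_name}\n"
        "WITH METRICS\n"
        "LANGUAGE YAML\n"
        f"AS $$\n{yaml_body}$$"
    )


ddl = build_metric_view_ddl(METRIC_VIEW, METRIC_VIEW_YAML)
print(ddl)

# In a Databricks notebook on a supported runtime you would then run:
# spark.sql(ddl)
```

Keeping the YAML in one place like this also makes it easy to review the measure definitions before they reach Genie.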

Step 3: Build a Genie on top of the Metric View

With the metric view ready, the next step is to bring it into Databricks Genie. Genie allows you to ask natural-language questions over your metric views while ensuring consistency and governance.

Here, I have simply used a generated SQL query as the ground truth SQL (this is used later for benchmarking), but you can write your own ground truth SQL against any relevant tables of your choice.

Here’s how I set it up:

Open Genie from the left-hand Databricks menu.

Click Create Genie and give it a name. In my example, I called it “super_store genie”.

Under Data Sources, add the metric view you just created.

Now ask it a question in plain English and see the magic Genie brings.

Note: Asking a question doesn’t guarantee that the results will always be correct. Genie works best when the metric view is well-defined, instructions are clear, and synonyms for ambiguous terms are provided.

Step 4: Benchmark the results

Once your Genie is set up and connected to the metric view, the next step is to validate its performance and accuracy. Benchmarking ensures that the natural-language answers Genie provides are trustworthy and aligned with the underlying data.

Here’s how I approached it:

Define test prompts:

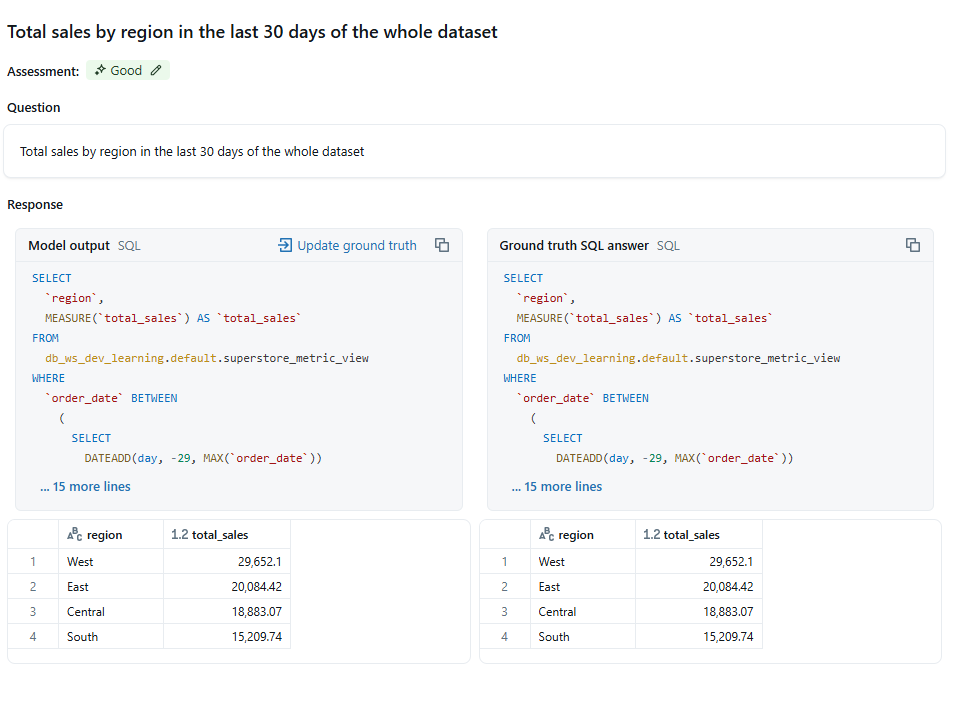

Prepare a list of sample questions that a business user might ask. For example:

“Total sales by region in the last 30 days of the whole dataset”

“Average discount by segment”

“Number of unique orders in January 2025”

“Top 5 categories by profit”
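
It can help to keep each test prompt next to the ground truth SQL you expect for it, so the pair can be pasted into the benchmark together. The sketch below does this in Python for two of the prompts. It assumes a metric view with hypothetical measures `avg_discount` and `total_profit` and hypothetical dimensions `segment` and `category`. The view name is made up too. It queries measures through `MEASURE()`, which is how metric view measures are read in SQL.

```python
# Hypothetical metric view name; replace with your own.
METRIC_VIEW = "main.default.superstore_metrics"


def measure_query(measure, dimension, view=METRIC_VIEW, order_desc=False, limit=None):
    """Build a simple 'measure by dimension' query against a metric view."""
    sql = (
        f"SELECT {dimension}, MEASURE({measure}) AS {measure}\n"
        f"FROM {view}\n"
        f"GROUP BY {dimension}"
    )
    if order_desc:
        sql += f"\nORDER BY {measure} DESC"
    if limit is not None:
        sql += f"\nLIMIT {limit}"
    return sql


# Each benchmark pairs a business question with its ground truth SQL.
BENCHMARKS = [
    {
        "question": "Average discount by segment",
        "ground_truth_sql": measure_query("avg_discount", "segment"),
    },
    {
        "question": "Top 5 categories by profit",
        "ground_truth_sql": measure_query(
            "total_profit", "category", order_desc=True, limit=5
        ),
    },
]

for case in BENCHMARKS:
    print(case["question"])
    print(case["ground_truth_sql"])
    print()
```

Date-bound prompts such as “Number of unique orders in January 2025” need a filter on a date dimension, so they are best written by hand rather than through this helper.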

Capture Genie’s SQL output:

For each prompt, let Genie generate the SQL query behind the scenes. This allows you to verify the logic against the metric view and ensure that it is using the correct metrics and dimensions.

Compare results against a gold standard:

Execute the SQL manually, or use pre-validated queries, and compare the results with what Genie returns. Track any discrepancies. Add the ground truth SQL to the benchmark, then add that benchmark. Click on Evaluations, and you can see the accuracy of each prompt.
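
If you want to do the manual comparison outside the UI, the idea can be sketched in a few lines of Python. This is only an illustration of comparing two result sets, not how Genie’s own Evaluations tab scores a prompt. The rows below are made-up placeholder values, and the function names are my own.

```python
def normalise(rows, ndigits=2):
    """Round floats and sort rows so row order and float noise don't cause false mismatches."""
    def clean(value):
        return round(value, ndigits) if isinstance(value, float) else value

    return sorted((tuple(clean(v) for v in row) for row in rows), key=repr)


def results_match(genie_rows, truth_rows):
    """True when both result sets contain the same rows, ignoring order."""
    return normalise(genie_rows) == normalise(truth_rows)


def accuracy(cases):
    """Share of benchmark cases where Genie's rows match the ground truth rows."""
    passed = sum(results_match(c["genie"], c["truth"]) for c in cases)
    return passed / len(cases)


# Placeholder rows for illustration only; these are not real Superstore figures.
cases = [
    {
        "question": "Total sales by region",
        "genie": [("East", 100.0), ("West", 250.5)],
        "truth": [("West", 250.5), ("East", 100.0)],
    },
    {
        "question": "Average discount by segment",
        "genie": [("Consumer", 0.15)],
        "truth": [("Consumer", 0.2)],
    },
]

for c in cases:
    status = "match" if results_match(c["genie"], c["truth"]) else "MISMATCH"
    print(f"{c['question']}: {status}")

print(f"Accuracy: {accuracy(cases):.0%}")
```

This comparison ignores row order on purpose. For ranking questions such as “Top 5 categories by profit”, order matters, so compare the rows in sequence instead of sorting them first.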

Based on your benchmarking results, you can always improve by:

Refining Genie instructions for ambiguous terms.

Adding synonyms for metrics, dimensions, or business terminology.

Updating the metric view if calculations or aggregations need adjustment.

Benchmarking is not just about numbers; it’s about trust. By validating Genie’s answers, you ensure that business users can rely on governed, consistent metrics while benefiting from natural-language access.

Wrapping Up

In this post, we saw how to go from raw Superstore data to metric views and then use Databricks Genie to ask questions in plain English. We also learned how to benchmark Genie’s results to ensure accuracy and trust. I hope you play around with it a little and let me know what magic you experience. Until then, happy learning!