Gen AI Made Simple: Prompt Engineering

In my previous blog post, we explored the basics of Generative AI: what it is and how it works. Now, I’m taking it a step further with a new series where I’ll dive deeper into the building blocks that make AI truly powerful.

In this series, we’ll explore topics like Prompt Engineering, Retrieval-Augmented Generation (RAG), Agents, Fine-tuning, Dataset Engineering, Inference Optimization, and more. The idea is simple: as I learn and experiment with these concepts, I’ll share practical insights, real-world examples, and lessons that you can apply too.

Today, let’s dive into understanding some of the best practices of Prompt Engineering:

Prompt Engineering: Models can do many things, but you must tell them exactly what you want.

The process of crafting an instruction to get a model to do what you want is called Prompt Engineering.

This may sound simple, but in practice it requires clarity, structure, and iteration. Think of it like giving directions to a competent assistant who knows a lot but can’t read your mind. A vague request leads to vague answers. A precise, step-by-step request leads to accurate and actionable output.

Having experienced this myself, I no longer underestimate the power of a well-crafted prompt.

Defining the task: A crystal-clear task prevents wasted iterations. If the model doesn’t know the job, it can’t do it well.

Identifying the inputs: The rule is simple: good inputs = better context = better answers.

Creating Detailed Prompts: Clear and detailed prompts help the model understand exactly what you expect.

Refining the prompts: Refining prompts means improving your question step by step so the AI understands you better. You try something, see what answer you get, and then make small changes until the answer is just right.

Prompt Engineering Best Practices:

Did you know that each model has its own set of best practices? Different models “understand” and respond to instructions slightly differently, so even when you give the same prompt to different models, they produce different outputs.

Why?

Different models have different brains (or more accurately, different training data and architectures). That means:

They learn from different examples.

Each model was trained on unique data. Some saw more code, some saw more stories, some saw more academic text. So, the style of question they understand best varies.

They’re tuned for different goals:

GPT models are fine-tuned to follow instructions clearly.

Claude is tuned for long-form reasoning and empathy. You can even use XML tags to structure a prompt for Claude.

Gemini is optimized for multi-modal reasoning (text + image).
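To make the XML-tag idea mentioned above a bit more concrete, here is a minimal sketch in Python. The helper `tag` and the tag names `instructions` and `article` are my own illustrative choices, not names required by any model; the point is only that each part of the prompt sits inside its own clearly labeled block.

```python
def tag(name: str, content: str) -> str:
    """Wrap a piece of the prompt in an XML-style tag (hypothetical helper)."""
    return f"<{name}>\n{content}\n</{name}>"


# Tag names are illustrative assumptions; pick names that describe your content.
prompt = "\n\n".join([
    tag("instructions", "Summarize the article in three concise bullet points."),
    tag("article", "(paste the article text here)"),
])

print(prompt)
```

Separating the instructions from the material they apply to makes it harder for the model to confuse the two.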

If you want a more in-depth look at the best practices for specific models, take a look at this.

This section focuses on general techniques that have been proven to work with a wide range of models and will likely remain relevant in the near future.

Write Clear and Explicit Instructions:

Communicating with AI is much like communicating with humans: clarity always helps. Explaining what you want the model to do, without ambiguity, is an art in itself, in my opinion.

If you want the model to score an essay, explain the scoring system you want it to use. If the model returns a fractional score such as 4.5 and you want whole numbers, tell it to output only integer scores.
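As a rough illustration of the essay-scoring example, the sketch below builds a prompt that states the scale and the output format explicitly, then checks that a reply really is a whole number. The function names and the 1 to 5 scale are assumptions I made for the example, and no model is actually called here.

```python
import re


def build_scoring_prompt(essay: str, min_score: int = 1, max_score: int = 5) -> str:
    """Build a prompt that spells out the scoring scale and the output format."""
    return (
        f"Score the essay below on a scale from {min_score} to {max_score}, "
        f"where {min_score} is the weakest and {max_score} is the strongest.\n"
        "Output only a single integer score. Do not output fractions such as 4.5, "
        "and do not add any explanation.\n\n"
        f"Essay:\n{essay}"
    )


def parse_integer_score(reply: str, min_score: int = 1, max_score: int = 5) -> int:
    """Accept the reply only if it is a bare integer inside the allowed range."""
    text = reply.strip()
    if not re.fullmatch(r"\d+", text):
        raise ValueError(f"Expected an integer score, got: {reply!r}")
    score = int(text)
    if not min_score <= score <= max_score:
        raise ValueError(f"Score {score} is outside {min_score}-{max_score}")
    return score


print(build_scoring_prompt("Remote work improves focus because..."))
```

Checking the reply in code is a useful safety net: if the model still answers "4.5", you find out immediately and can tighten the instruction.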

Adopt a Persona:

Sometimes, giving the model a specific role or perspective (a “persona”) helps it generate more relevant and accurate responses. By adopting a persona, you set the context for how the AI should think and respond.

For example:

Instead of asking “Explain SQL joins”, you could say:

“You are a friendly data engineering instructor explaining SQL joins to a beginner. Use simple language and real-world analogies.”

This approach helps the model tailor its explanation to the intended audience and style, leading to outputs that feel more useful and natural.
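Here is one way the persona example could look in code. I am assuming the common chat convention of a list of messages with `role` and `content` keys, where the persona goes into a "system" message; the exact format depends on the model provider you use, and `build_persona_messages` is a hypothetical helper.

```python
def build_persona_messages(persona: str, question: str) -> list[dict[str, str]]:
    """Put the persona in a system message and the actual question in a user message."""
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": question},
    ]


messages = build_persona_messages(
    persona=(
        "You are a friendly data engineering instructor explaining SQL joins "
        "to a beginner. Use simple language and real-world analogies."
    ),
    question="Explain SQL joins.",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the persona separate from the question means you can reuse the same persona across many questions without rewriting it each time.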

Provide Examples:

Examples act as a blueprint for the AI. They reduce ambiguity and show the model exactly how you want it to respond. Instead of just giving instructions, you also give a sample response that sets the tone, style, and format.

For instance:

If you want the AI to summarize text in bullet points, you can say:

“Summarize the following article into three concise bullet points. Example:

• Key takeaway one

• Key takeaway two

• Key takeaway three”

If you want the AI to format answers like a Q&A, you can instruct:

“Answer in this format:

Q: What is the capital of France?

A: Paris”

By providing examples, you’re not leaving room for guesswork; the model knows exactly what the response should look like.

Giving the model a few examples to guide it is known as few-shot learning. If you provide no examples, the model generates output purely from the instructions, and this is called zero-shot learning.
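The difference between zero-shot and few-shot can be sketched with a small prompt builder. This is only an illustration: `build_prompt` is a hypothetical helper, and the Q/A layout reuses the format from the example above.

```python
def build_prompt(instruction: str, question: str, examples=()) -> str:
    """Build a Q&A prompt; with no examples it is zero-shot, with examples it is few-shot."""
    lines = [instruction, ""]
    for example_question, example_answer in examples:
        lines += [f"Q: {example_question}", f"A: {example_answer}", ""]
    # End with the real question and an open "A:" for the model to complete.
    lines += [f"Q: {question}", "A:"]
    return "\n".join(lines)


zero_shot = build_prompt("Answer in one word.", "What is the capital of Japan?")

few_shot = build_prompt(
    "Answer in one word.",
    "What is the capital of Japan?",
    examples=[("What is the capital of France?", "Paris")],
)

print(zero_shot)
print("-----")
print(few_shot)
```

The only difference between the two prompts is the worked example, which is exactly what shows the model the tone and format you expect.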

Break Complex Tasks into Simpler Subtasks:

Complex tasks can overwhelm both humans and AI. Breaking them into smaller, manageable subtasks improves accuracy.

For example, instead of asking the AI to “Plan a database migration,” you can break it down into subtasks:

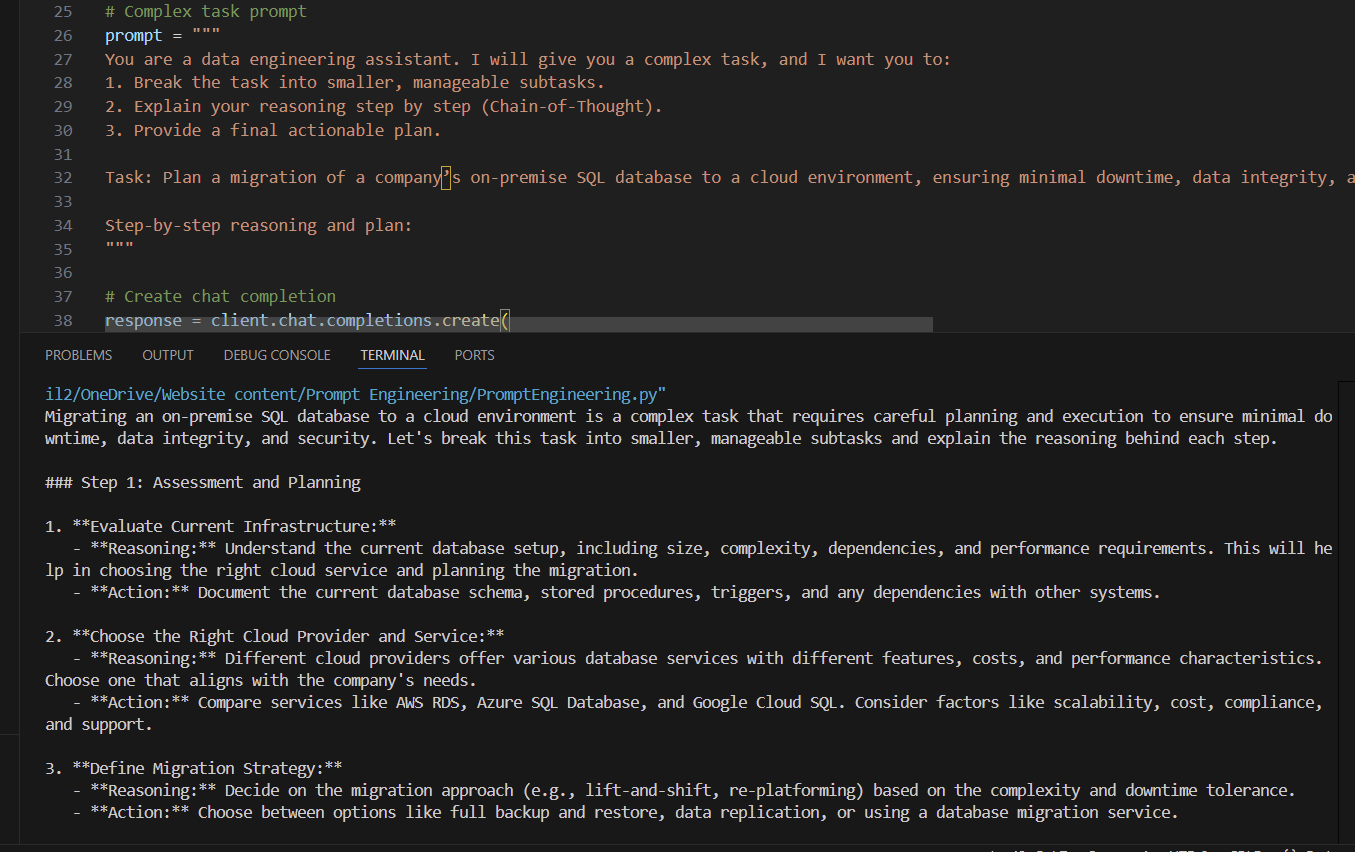

In the screenshot, I asked the model to break the task down into smaller, manageable subtasks and to explain its reasoning step by step.

In the prompt, I spelled out the chain of thought I wanted the model to follow.

The model has provided me with a step-by-step approach to deal with this user story.
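If you want to do the same thing in code rather than in a single chat message, a minimal sketch could look like the one below. The subtasks listed are my own illustrative breakdown of "Plan a database migration", not the ones from the screenshot, and `ask_model` is a placeholder for whatever function sends a prompt to your model; here it is replaced by a fake so the example runs on its own.

```python
from typing import Callable

# Illustrative subtasks (an assumption for this sketch).
SUBTASKS = [
    "List the tables and data volumes that need to move.",
    "Propose a migration order that respects dependencies between tables.",
    "Describe how to validate the data after each step.",
    "Write a rollback plan in case a step fails.",
]


def run_subtasks(task: str, subtasks: list[str], ask_model: Callable[[str], str]) -> list[str]:
    """Ask about one subtask at a time, feeding earlier answers into later prompts."""
    answers: list[str] = []
    for number, subtask in enumerate(subtasks, start=1):
        context = "\n".join(
            f"Step {i} result: {answer}" for i, answer in enumerate(answers, start=1)
        )
        prompt = (
            f"Overall task: {task}\n"
            f"{context}\n"
            f"Current step {number}: {subtask}\n"
            "Explain your reasoning step by step."
        )
        answers.append(ask_model(prompt))
    return answers


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, so the sketch runs without any API."""
    return f"(model reply to a {len(prompt)}-character prompt)"


results = run_subtasks("Plan a database migration", SUBTASKS, fake_model)
for step, reply in zip(SUBTASKS, results):
    print(f"{step}\n  -> {reply}")
```

Each prompt stays small and focused, and because earlier answers are passed along, later steps can build on them instead of starting from scratch.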

Wrapping Up:

Prompt engineering is both an art and a science. By writing clear instructions, adopting personas, providing examples, breaking tasks into subtasks, and using techniques like few-shot, zero-shot, and chain-of-thought, you can unlock the full potential of AI models.

I hope you gained some useful takeaways today. This is my naïve effort to simplify these concepts, and I look forward to sharing more in the upcoming posts of this series.